“LudicrouslyFastAPI:” Deploy Python Functions as APIs on your own Infrastructure

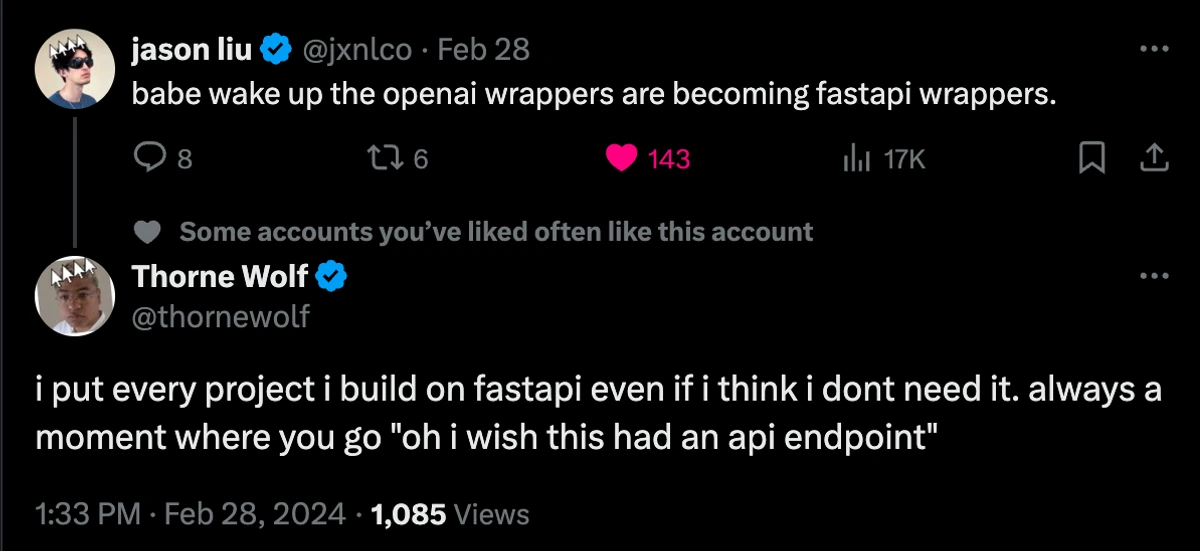

What if we made building and deploying FastAPI apps even faster? 🤯

CEO @ 🏃♀️Runhouse🏠

FastAPI and Flask are elegant and effective tools, allowing you to stand up a new API endpoint on localhost in minutes just by defining a few functions and routes. Unfortunately, serving the endpoint on localhost is usually where the fun ends. There are some daunting gaps between you and a shareable service from there:

- DevOps learning curve: The minimum bar for publishing an app to share with others is simply way too high. AI researchers and engineers generally do not want to learn all about Nginx, Authentication, Certificates, Telemetry, Docker, Terraform, etc.

- Boilerplate and repetition: Even once you’ve learned your way around DevOps, packaging each Python function into a service requires reintroducing that boilerplate over and over. The middleware and deployment must be wired up in each new application.

- Iteration and debugging: Debugging through HTTP calls is challenging and the iteration loop is slow - at best you’re restarting the FastAPI server, at worst you’re rebuilding all your artifacts and deploying anew.

What if you could turn any Python function into a service effortlessly, without the DevOps know-how, boilerplate, or DevX hit?

Deploying and Serving with Kubetorch

We’re excited to share a new suite of features in Kubetorch by Runhouse to provide this zero-to-serving experience, taking you from a Python function or class to an endpoint serving on your own infrastructure. All this, plus a high-iteration, debuggable dev experience with lightning-fast redeployment. Existing Runhouse users will recognize the APIs: simply send your Python function to your infrastructure and we’ll give you an endpoint back that you can call or share with others.

```python
# welcome_app.py
import kubetorch as kt


def welcome(name: str = "home"):
    return f"Welcome {name}!"


if __name__ == "__main__":
    cpu_box = kt.compute(cpus="2", open_ports=[443], server_connection_type="tls")
    remote_fn = kt.function(welcome).to(cpu_box)
    print(remote_fn("home"))
    print(remote_fn.endpoint())
```

Et voilà, you have a service running on the cloud of your choice (in this case, an EKS cluster for us) which can handle thousands of requests per second. In this short stretch of code, Kubetorch is:

- Launching the compute

- Installing Caddy, creating self-signed certificates to secure traffic with HTTPS (you can provide your own domain as well), and starting Caddy as a reverse proxy

- Starting an HTTP server daemon on the remote machine

- Deploying the function into the server

- Giving you a client to call and debug the service, as well as an HTTP endpoint

When we rerun the script, most of the above is aggressively cached and hot-restarted to provide a snappy, high-iteration experience directly on the deployed app. This is much of the magic: we’ve worked for the last year to achieve what we feel is the best possible DevX for deploying and iterating on Python apps on your own remote infrastructure. If we make a code change and rerun the script, the log below shows the new app being redeployed in roughly two seconds, faster than it would take to restart FastAPI or Flask on localhost!
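One common way to get this kind of fast rerun is to fingerprint each deployment input and skip any step whose input has not changed since the last run. The sketch below illustrates that general technique only; it is an assumption about how such caching can work, not a description of Kubetorch's internals, and `StepCache` and `run_step` are hypothetical names.

```python
import hashlib


class StepCache:
    """Remember a fingerprint per step and rerun only when the input changes."""

    def __init__(self):
        self._fingerprints = {}

    def run_step(self, name: str, payload: bytes, action) -> bool:
        """Run `action(payload)` unless `payload` matches the last run of `name`.

        Returns True if the step ran, False if it was skipped as a cache hit.
        """
        digest = hashlib.sha256(payload).hexdigest()
        if self._fingerprints.get(name) == digest:
            return False
        action(payload)
        self._fingerprints[name] = digest  # Record only after the action succeeds.
        return True


if __name__ == "__main__":
    cache = StepCache()
    deployed = []  # Stand-in for "code that was actually sent to the server".
    source_v1 = b'def welcome(name="home"): return f"Welcome {name}!"'
    source_v2 = b'def welcome(name="home"): return f"Hello {name}!"'

    print(cache.run_step("sync_code", source_v1, deployed.append))  # first run
    print(cache.run_step("sync_code", source_v1, deployed.append))  # unchanged
    print(cache.run_step("sync_code", source_v2, deployed.append))  # edited
```

With a scheme like this, an unchanged environment or package is a cheap hash comparison, and only the edited function has to be resent.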

```shell
$ python welcome_app.py
INFO | 2024-04-02 06:04:09.956727 | Server my-cpu is up.
INFO | 2024-04-02 06:04:09.957834 | Copying package from file:///Users/donny/code/rh_samples to: my-cpu
INFO | 2024-04-02 06:04:11.094162 | Calling base_env.install
INFO | 2024-04-02 06:04:11.686541 | Time to call base_env.install: 0.59 seconds
INFO | 2024-04-02 06:04:11.699101 | Sending module welcome to my-cpu
INFO | 2024-04-02 06:04:11.746858 | Calling welcome.call
INFO | 2024-04-02 06:04:11.766914 | Time to call welcome.call: 0.02 seconds
Welcome home!
https://54.173.54.42/welcome

$ curl -k https://54.173.54.42/welcome/call -X POST -d '{"name":"home!"}' -H 'Content-Type: application/json'
"Welcome home!"
```
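The same HTTP call can be made from Python without any Kubetorch client. The following is a minimal standard-library sketch: `build_call_request`, `insecure_context`, and `call_endpoint` are hypothetical helper names, the `/call` suffix is taken from the curl command above, and disabling certificate verification mirrors `curl -k`, which is only reasonable for a self-signed certificate during testing.

```python
import json
import ssl
from urllib.request import Request, urlopen


def build_call_request(endpoint: str, **kwargs) -> Request:
    """Build a POST to <endpoint>/call with the kwargs as a JSON body."""
    return Request(
        endpoint.rstrip("/") + "/call",
        data=json.dumps(kwargs).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def insecure_context() -> ssl.SSLContext:
    """Rough equivalent of `curl -k`: skip verification of a self-signed cert."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # Must be disabled before changing verify_mode.
    ctx.verify_mode = ssl.CERT_NONE
    return ctx


def call_endpoint(endpoint: str, **kwargs):
    """Send the request and decode the JSON reply (performs a network call)."""
    req = build_call_request(endpoint, **kwargs)
    with urlopen(req, context=insecure_context(), timeout=10) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    # Address copied from the sample output above; substitute your own endpoint,
    # then use call_endpoint(...) to actually send the request.
    req = build_call_request("https://54.173.54.42/welcome", name="home")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
```

If you supply your own domain and a trusted certificate, drop `insecure_context()` and let `urlopen` verify the connection normally.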

This is not meant to replace FastAPI - it’s built on top of it to provide more extensive automation, faster iteration (e.g. hot-restarts), and more built-in DevOps. It’s a great place to start with any project, and likely all you need for offline jobs, internal tools you’ll share among your team, and quick UAT endpoints. If you eventually decide you’d like to deploy your app through an existing DevOps flow or into an existing container, you don’t need to rework your entire app, as Runhouse is also fully capable of local serving like a FastAPI or Flask app (and, like FastAPI, takes advantage of Python async for high performance).

Plenty to do

Kubetorch by Runhouse is a powerful way to quickly stand up Python services on your own infrastructure. Our mission is to make it easy for anyone to build Python apps on any infrastructure, and we have an exciting roadmap ahead.

Getting help

If you have questions, feedback, or are interested in contributing, feel free to open a GitHub issue, message us on Discord, or email me at donny@run.house.
